Image courtesy of Phil Wheeler Illustrations

Can you learn to see the world differently? Some people already do. People with synaesthesia experience the world very differently indeed, in a way that seems linked to creativity, and which can shed light on some of the deepest mysteries of consciousness. In a paper published in Scientific Reports, we describe new evidence suggesting that non-synaesthetes can be trained to experience the world much like natural synaesthetes. Our results have important implications for understanding individual differences in conscious experiences, and they extend what we know about the flexibility (‘plasticity’) of perception.

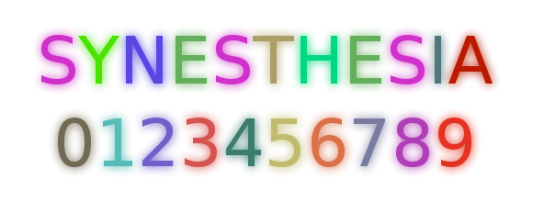

Synaesthesia means that an experience of one kind (like seeing a letter) consistently and automatically evokes an experience of another kind (like seeing a colour), when the normal kind of sensory stimulation for the additional experience (the colour) isn’t there. This example describes grapheme-colour synaesthesia, which is just one among many fascinating varieties. Other synaesthetes experience numbers as having particular spatial relationships (spatial form synaesthesia, probably the most common of all). And there are other, more unusual varieties, like mirror-touch synaesthesia, where people experience touch on their own bodies when they see someone else being touched, and taste-shape synaesthesia, where triangles might taste sharp, and ellipses bitter.

The richly associative nature of synaesthesia, and the biographies of famous case studies like Vladimir Nabokov and Wassily Kandinsky (or, as the Daily Wail preferred: Lady Gaga and Pharrell Williams), have fuelled its association with creativity and intelligence. Yet the condition is remarkably common, with recent estimates suggesting about 1 in 23 people have some form of synaesthesia. But how does it come about? Is it in your genes, or is it something you can learn?

It is widely believed that Kandinsky was synaesthetic. For instance, he said: “Colour is the keyboard, the eyes are the hammers, the soul is the piano with many strings. The artist is the hand that plays, touching one key or another, to cause vibrations in the soul.”

As with most biological traits, the truth is: a bit of both. But this still raises the question of whether being synaesthetic is something that can be learnt, even as an adult.

There is a rather long history of attempts to train people to be synaesthetic. Perhaps the earliest example was by E.L. Kelly, who in 1934 published a paper titled: An experimental attempt to produce artificial chromaesthesia by the technique of the conditioned response. While this attempt failed (the paper describes itself as “a report of purely negative experimental findings”), things have now moved on.

More recent attempts, for instance the excellent work of Olympia Colizoli and colleagues in Amsterdam, have tried to mimic (grapheme-colour) synaesthesia by having people read books in which some of the letters are always coloured in with particular colours. They found that it was possible to train people to display some of the characteristics of synaesthesia, like being slower to name coloured letters when they were presented in a colour conflicting with the training (the ‘synaesthetic Stroop’ effect). But crucially, until now no study has found that training could lead to people actually reporting synaesthesia-like conscious experiences.

An extract from the ‘coloured reading’ training material, used in our study, and similar to the material used by Colizoli and colleagues. The text is from James Joyce. Later in training we replaced some of the letters with (appropriately) coloured blocks to make the task even harder.

Our approach was based on brute force. We decided to dramatically increase the length and rigour of the training procedure that our (initially non-synaesthetic) volunteers undertook. Each of them (14 in all) came into the lab for half an hour each day, five days a week, for nine weeks! On each visit they completed a selection of training exercises designed to cement specific associations between letters and colours. Crucially, we adapted the difficulty of the tasks to each volunteer and each training session, and we also gave them financial rewards for good performance. Over the nine-week regime, some of the easier tasks were dropped entirely, and other more difficult tasks were introduced. Our volunteers also had homework to do, like reading the coloured books. Our idea was that the training must always be challenging, in order to have a chance of working.

The results were striking. At the end of the nine-week exercise, our dedicated volunteers were tested for behavioural signs of synaesthesia, and – crucially – were also asked about their experiences, both inside and outside the lab. Behaviourally, they all showed strong similarities with natural-born synaesthetes. This was most striking in measures of ‘consistency’, a test which requires repeatedly selecting the colour associated with a particular letter from a palette of millions of colours.

The consistency test for synaesthesia. This example is from David Eagleman’s popular ‘synaesthesia battery’.

Natural-born synaesthetes show very high consistency: the colours they pick (for a given letter) are very close to each other in colour space, across repeated selections. This is important because consistency is very hard to fake. The idea is that synaesthetes can simply match a colour to their experienced ‘concurrent’, whereas non-synaesthetes have to rely on less reliable visual memory, or other strategies.
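To make “very close to each other in colour space” a little more concrete, here is a minimal sketch of how a consistency score could be computed. It assumes each selection is recorded as an (R, G, B) triple scaled from 0 to 1, and that consistency is scored as the summed city-block distance between every pair of repeated selections for a letter, averaged over letters (lower means more consistent). These scoring choices and the function names are illustrative assumptions, not necessarily the exact procedure used in our paper or in the Eagleman battery.

```python
from itertools import combinations


def pair_distance(a, b):
    """City-block distance between two RGB colours (channels assumed in 0..1)."""
    return sum(abs(x - y) for x, y in zip(a, b))


def grapheme_score(trials):
    """Sum of distances over every pair of repeated selections for one letter."""
    return sum(pair_distance(a, b) for a, b in combinations(trials, 2))


def consistency_score(selections):
    """Mean per-letter score across letters; lower means more consistent.

    `selections` maps each letter to its list of repeated RGB picks
    (a hypothetical data layout chosen for illustration).
    """
    scores = [grapheme_score(trials) for trials in selections.values()]
    return sum(scores) / len(scores)


# Illustrative (made-up) picks: someone who reliably chooses near-identical reds
# for 'a', versus someone whose picks for 'a' land far apart in colour space.
consistent = {"a": [(1.0, 0.0, 0.0), (0.95, 0.05, 0.0), (0.98, 0.0, 0.02)]}
inconsistent = {"a": [(1.0, 0.0, 0.0), (0.0, 0.0, 1.0), (0.0, 1.0, 0.0)]}

print(consistency_score(consistent), consistency_score(inconsistent))
```

The point of the sketch is simply that a synaesthete matching a vivid concurrent colour should produce small pairwise distances, whereas someone relying on visual memory tends to scatter their picks and produce larger ones.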

Our trained quasi-synaesthetes passed the consistency test with flying colours (so to speak). They also performed much like natural synaesthetes on a whole range of other behavioural tests, including the synaesthetic Stroop task and a ‘synaesthetic conditioning’ task, which shows that trained colours can elicit automatic physiological responses, like increases in skin conductance. Most importantly, the majority (8/14) of our volunteers described colour experiences much like those of natural synaesthetes (only 2 reported no colour phenomenology at all). Strikingly, some of these experiences took place even outside the lab:

“When I was walking into campus I glanced at the University of Sussex sign and the letters were coloured” [according to their trained associations]

Like natural synaesthetes, some of our volunteers seemed to experience the concurrent colour ‘out in the world’ while others experienced the colours more ‘in the head’:

“When I am looking at a letter I see them in the trained colours”

“When I look at the letter ‘p’ … its like the inside of my head is pink”

For grapheme-colour synaesthetes, letters evoke specific colour experiences. Most of our trained quasi-synaesthetes reported similar experiences. This image is, however, quite misleading. Synaesthetes (natural-born or not) also see the letters in their actual colour, and they typically know that the synaesthetic colour is not ‘real’. But that’s another story.

These results are very exciting, suggesting for the first time that with sufficient training, people can actually learn to see the world differently. Of course, since they are based on subjective reports about conscious experiences, they are also the hardest to independently verify. There is always the slight worry that our volunteers said what they thought we wanted to hear. Against this worry, we were careful to ensure that none of our volunteers knew the study was about synaesthesia (and on debrief, none of them did!). Also, similar ‘demand characteristic’ concerns could have affected other synaesthesia training studies, yet none of these led to descriptions of synaesthesia-like experiences.

Our results weren’t just about synaesthesia. A fascinating side effect was that our volunteers registered a dramatic increase in IQ, gaining an average of about 12 IQ points (compared to a control group which didn’t undergo training). We don’t yet know whether this increase was due to the specifically synaesthetic aspects of our regime, or just intensive cognitive training in general. Either way, our findings provide support for the idea that carefully designed cognitive training could enhance normal cognition, or even help remedy cognitive deficits or decline. More research is needed on these important questions.

What happened in the brain as a result of our training? The short answer is: we don’t know, yet. While in this study we didn’t look at the brain, other studies have found changes in the brain after similar kinds of training. This makes sense: changes in behaviour or in perception should be accompanied by neural changes of some kind. At the same time, natural-born synaesthetes appear to have differences both in the structure of their brains and in their activity patterns. We are now eager to see what kind of neural signatures underlie the outcome of our training paradigm. The hope is that, because our study showed actual changes in perceptual experience, analysis of these signatures will shed new light on the brain basis of consciousness itself.

So, yes, you can learn to see the world differently. To me, the most important aspect of this work is that it emphasizes that each of us inhabits our own distinctive conscious world. It may be tempting to think that while different people – maybe other cultures – have different beliefs and ways of thinking, still we all see the same external reality. But synaesthesia, together with emerging theories of ‘predictive processing’, shows that the differences go much deeper. We each inhabit our own personalised universe, albeit one which is partly defined and shaped by other people. So next time you think someone is off in their own little world: they are.

The work described here was led by Daniel Bor and Nicolas Rothen, and is just one part of an energetic inquiry into synaesthesia taking place at Sussex University and the Sackler Centre for Consciousness Science. With Jamie Ward and (recently) Julia Simner also working here, we have a uniquely concentrated expertise in this fascinating area. In other related work I have been interested in why synaesthetic experiences lack a sense of reality and how this gives an important clue about the nature of ‘perceptual presence’. I’ve also been working on the phenomenology of spatial form synaesthesia, and on whether synaesthetic experiences can be induced through hypnosis. And an exciting brain imaging study of natural synaesthetes will shortly hit the press! Nicolas Rothen is an authority on the relationship between synaesthesia and memory, and Jamie Ward and Julia Simner have way too many accomplishments in this field to mention. (OK, Jamie has written the most influential review paper in the area – featuring a lot of his own work – and Julia (with Ed Hubbard) has written the leading textbook. That’s not bad to start with.)

Our paper, Adults can be Trained to Acquire Synesthetic Experiences (sorry for US spelling) is published (open access, free!) in Scientific Reports, part of the Nature family. The authors were Daniel Bor, Nicolas Rothen, David Schwartzman, Stephanie Clayton, and Anil K. Seth. There has been quite a lot of media coverage of this work, for instance in the New Scientist and the Daily Fail. Other coverage is summarized here.